The Grammar of Seeing

A site-specific art installation at Google’s AI headquarters by Ahna Girshick, 2025

188 Embarcadero, San Francisco

UV-cured inks, mylar film, custom machine learning software

The Grammar of Seeing invites viewers to step inside the architecture of Google’s landmark Inception v1 vision model. Looking through each pane of glass is an act of perceiving through an artificial lens — a scaled-up metaphor for how AI perceives the world. As one ascends the floors of Google’s AI building at 188 Embarcadero, the imagery traces the simple-to-complex attunement of deep neural networks: edge detectors on the 4th floor; increasingly complex shapes and textures on the 5th; quasi-facial features on the 7th. Together, these layers reveal the hidden visual grammar of Inception v1 and its descendants. Human, animal, and machine visual systems all evolve similar sensitivities from simple to complex patterns, suggesting a shared perceptual logic — a universal grammar of vision across biological and artificial intelligence.

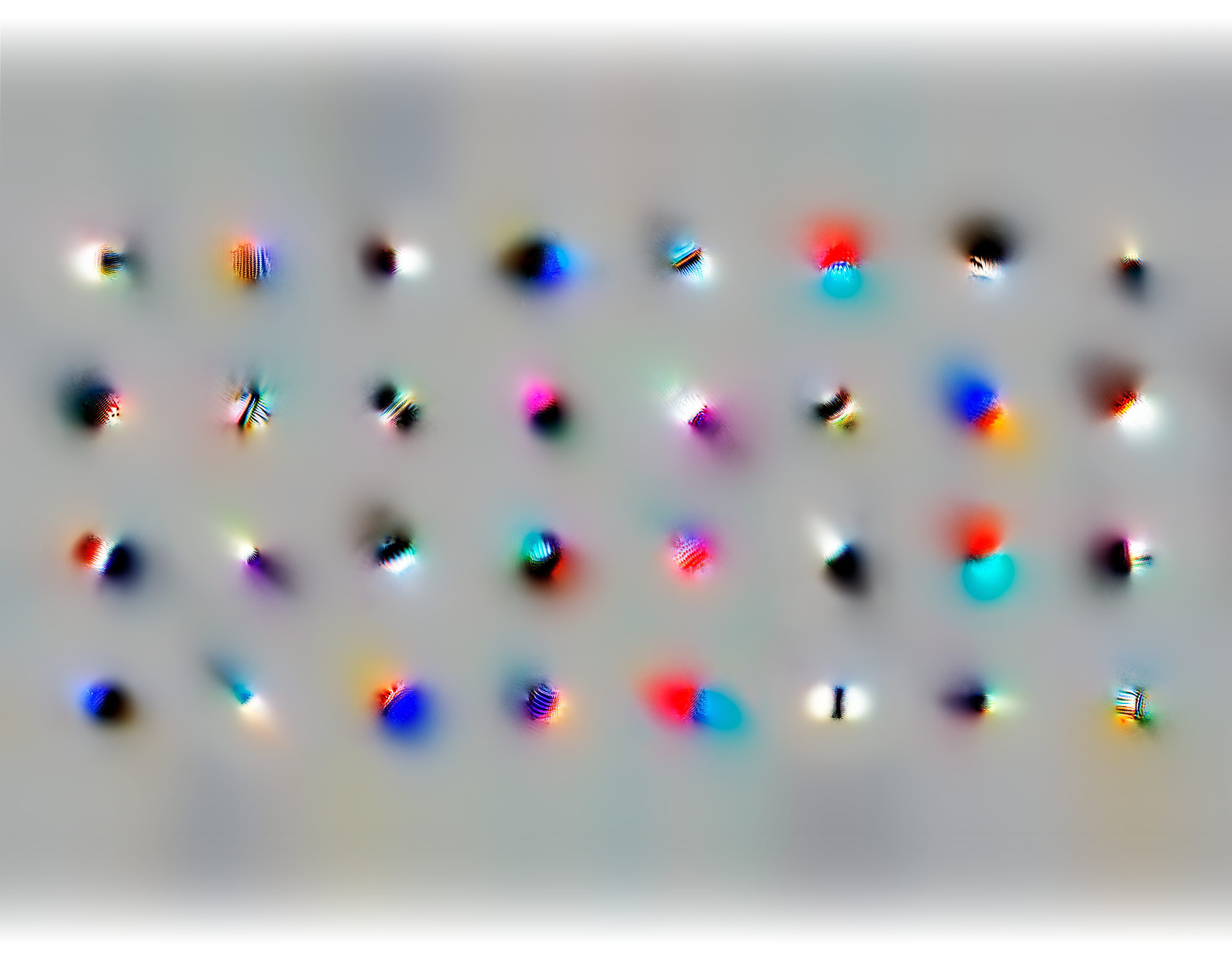

In the Inception v1 model, the first two layers, conv1 and conv2, focus on understanding light patterns in terms of basic edge structure. Eighty units from conv2 are depicted across four windows on the 4th floor, each specialized to detect an edge in a particular orientation and color pattern.

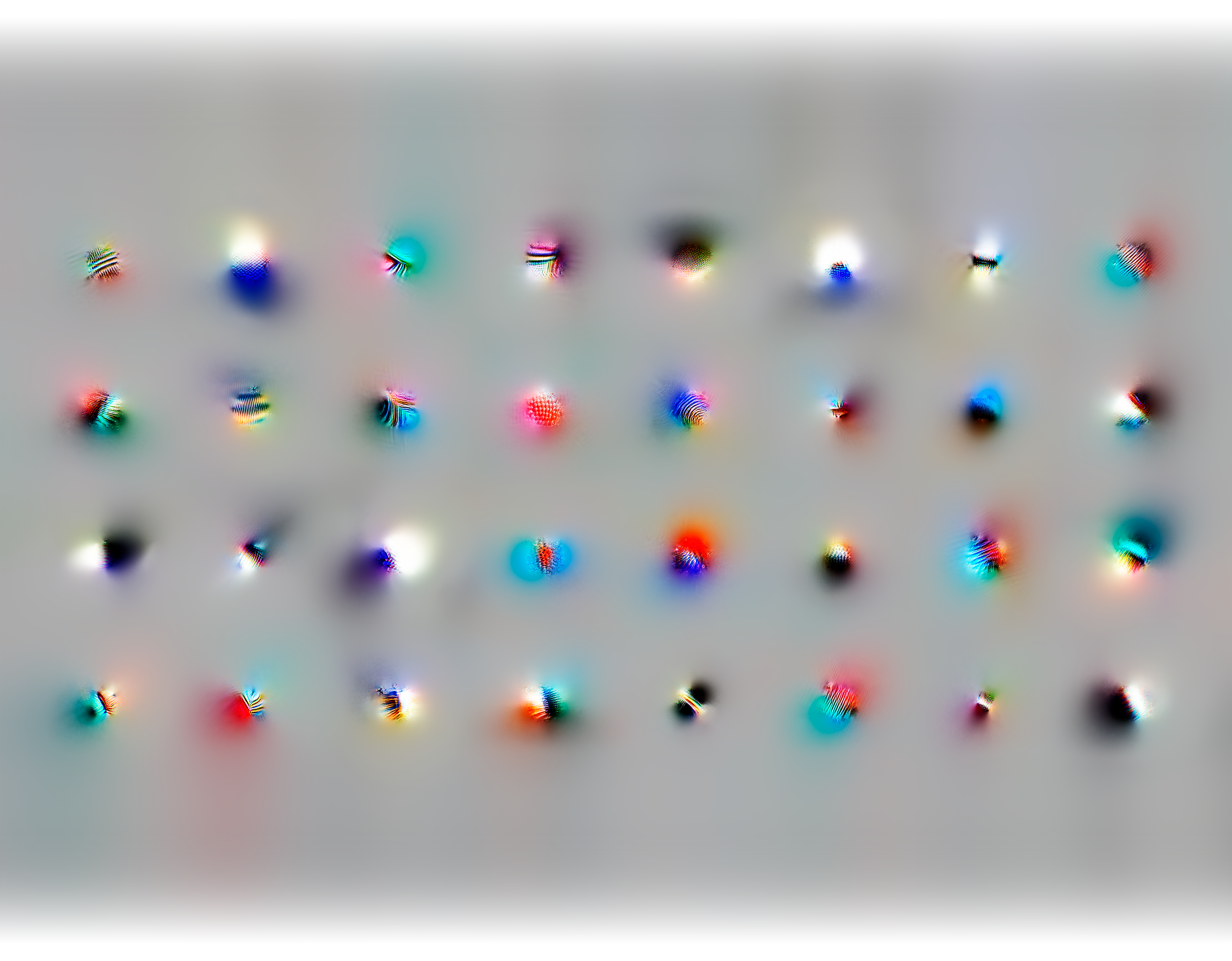

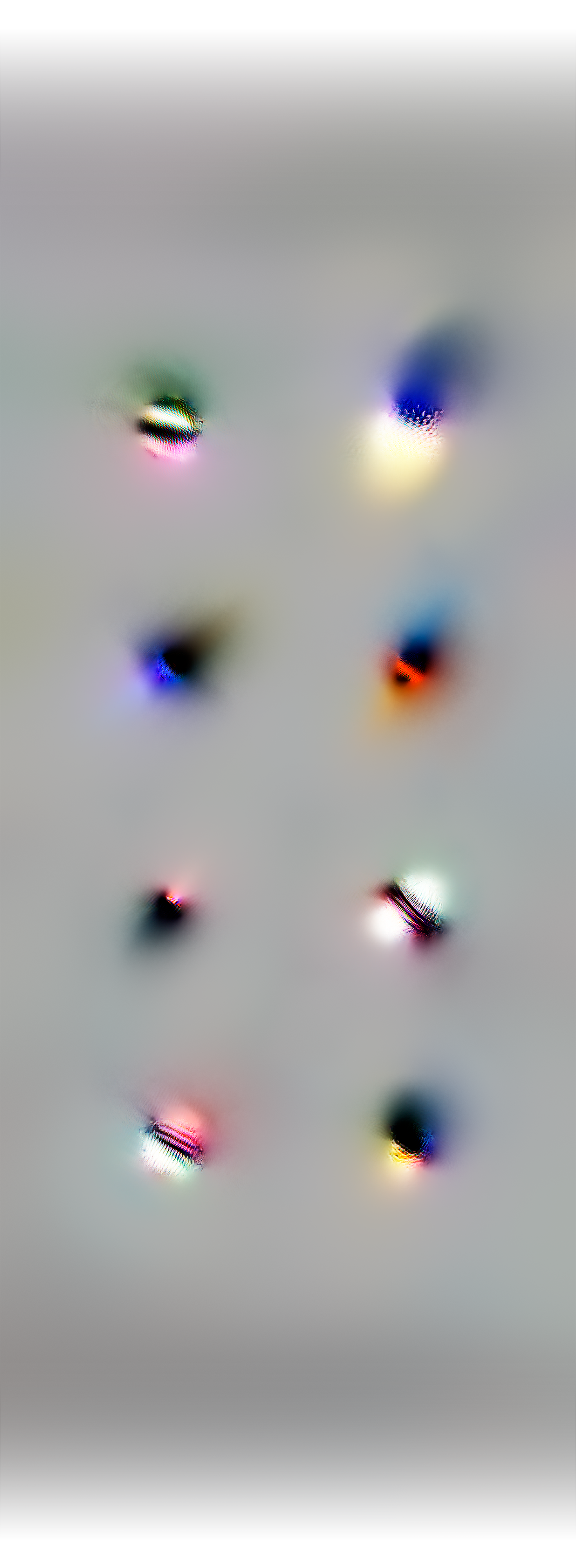
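
For readers curious what "specialized to detect an edge in a particular orientation" means in practice, here is a minimal sketch. It does not use Inception v1's learned weights. It assumes a hand-built Gabor-style filter as a stand-in, works in grayscale only (the conv2 units also respond to color), and every name and parameter value in it (`oriented_edge_kernel`, `response`, `sigma`, `wavelength`) is hypothetical and chosen for illustration.

```python
import numpy as np

def oriented_edge_kernel(theta, size=7, sigma=2.0, wavelength=4.0):
    """Odd-phase Gabor-style kernel: a sine grating under a Gaussian window.

    theta is the direction (in radians) along which brightness changes,
    so theta=0 responds most strongly to vertical edges.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    # Coordinate measured along the direction of brightness change.
    u = x * np.cos(theta) + y * np.sin(theta)
    envelope = np.exp(-(x**2 + y**2) / (2 * sigma**2))
    kernel = envelope * np.sin(2 * np.pi * u / wavelength)
    return kernel - kernel.mean()  # zero mean: flat regions give no response

def response(patch, kernel):
    """Magnitude of one filter response for a patch the size of the kernel."""
    return abs(float(np.sum(patch * kernel)))

# A 7x7 patch containing a vertical edge: dark on the left, bright on the right.
patch = np.zeros((7, 7))
patch[:, 4:] = 1.0

vertical = oriented_edge_kernel(theta=0.0)
horizontal = oriented_edge_kernel(theta=np.pi / 2)

# The vertically tuned filter responds strongly; the horizontal one barely at all.
print(response(patch, vertical), response(patch, horizontal))
```

A trained network arrives at filters like these through learning, not by design, and holds many of them at different orientations, scales, and color pairings.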

The output of the edge detectors is fed through the first inception unit, producing the "3a output". Units from this output are depicted across eight windows on the 5th floor, including two 18-foot panels, each specialized to detect simple abstract patterns.

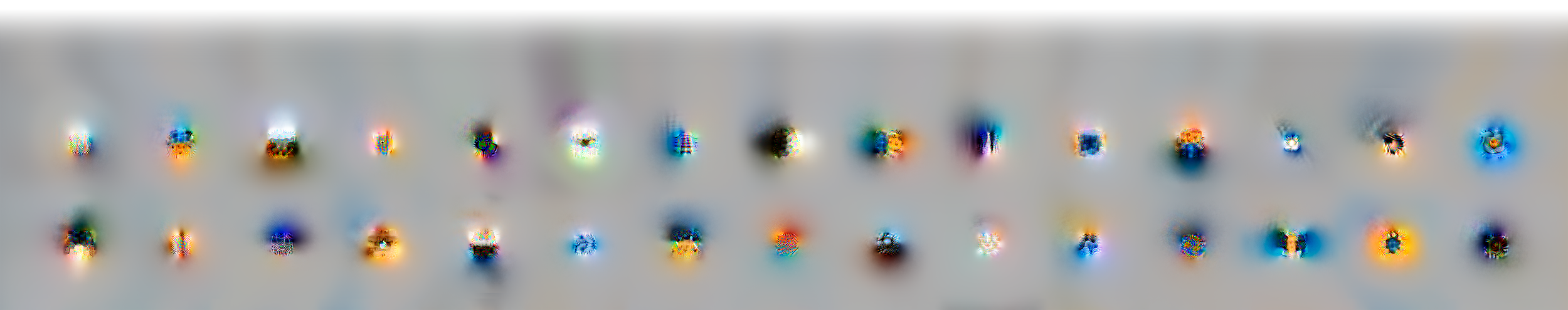

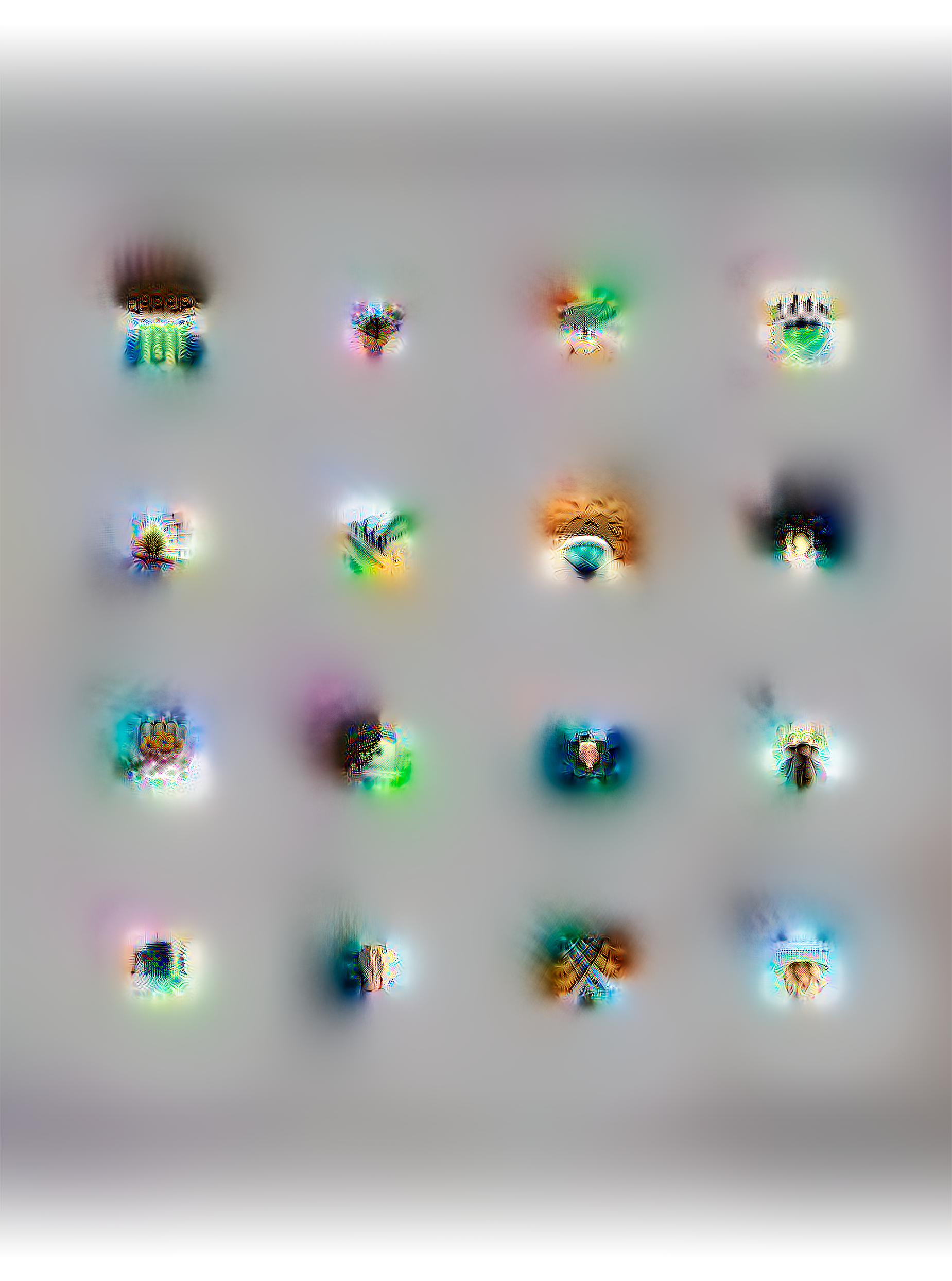

On the 7th floor is the output from the next inception unit, 3b, which builds upon 3a with more complex forms, including vaguely recognizable quasi-facial features.